It has been several months since my last post on classification tree models, because two things have been consuming all of my spare time. The first is that I taught a night class for the University of Connecticut Tampere, Finland

Specifically, Professor Frank Harrell, the developer of the extremely useful Hmisc package, asked the following:

How did you take into account model uncertainty? The uncertainty resulting from data mining to find nodes and thresholds for continuous predictors has a massive impact on confidence intervals for estimates from recursive partitioning.

The short answer is that model uncertainty was not accounted for in the results I presented last time, primarily because – as Professor Harrell’s comments indicate – this is a complicated issue for tree-based models. The primary objective of this post and the next few is to discuss this issue.

So first, what exactly is model uncertainty? Any time we fit an empirical model to data, the results we obtain inherit some of the uncertainty present in the data. For the specific example of linear regression models, the magnitude of this uncertainty is partially characterized by the standard errors included in the results returned by R’s summary() function. This magnitude depends on both the uncertainty inherent in the data and the algorithm we use to fit the model. Sometimes – and classification tree models are a case in point – this uncertainty is not restricted to variations in the values of a fixed set of parameters, but it can manifest itself in substantial structural variations.

That is, if we fit classification tree models to two similar but not identical datasets, the results may differ in the number of terminal nodes, the depths of these terminal nodes, the variables that determine the path to each one, and the values of these variables that determine the split at each intermediate node. This is the issue Professor Harrell raised in his comments, and the primary point of this post is to present some simple examples to illustrate its nature and severity.
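Here is a small illustration of the linear regression case mentioned above. The sketch fits the same lm() model to two synthetic samples drawn from one hypothetical data-generating process. That process is y = 2 + 3x plus Gaussian noise. The helper name simulateAndFit and all of the numbers are illustrative assumptions, not anything taken from the insurance data. summary() reports a standard error for each coefficient. The estimates change from one sample to the next, but the structure of the model (an intercept plus one slope) does not, and this is exactly the guarantee that classification trees lack.

```r
# Hypothetical data-generating process: y = 2 + 3x + Gaussian noise (sd = 0.5)
simulateAndFit <- function(iseed, n = 100) {
  set.seed(iseed)
  x <- runif(n)
  y <- 2 + 3 * x + rnorm(n, sd = 0.5)
  lm(y ~ x)
}

fitA <- simulateAndFit(iseed = 1)
fitB <- simulateAndFit(iseed = 2)

# summary() characterizes parameter uncertainty via coefficient standard errors
coef(summary(fitA))[, c("Estimate", "Std. Error")]

# The estimates differ between the two samples, but both fits have exactly
# the same structure: an intercept and a slope for x
rbind(A = coef(fitA), B = coef(fitB))
```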

In addition, this post has two other objectives. The first is to make amends for a very bad practice demonstrated in my last two posts. Specifically, the classification tree models described there were fit to a relatively large dataset and then evaluated with respect to that same dataset. This is bad practice because it can lead to overfitting, a problem that I will discuss in detail in my next post. (For a simple example that illustrates this problem, see the discussion in Section 1.5.3 of Exploring Data in Engineering, the Sciences, and Medicine.)

In the machine learning community, this issue is typically addressed by splitting the original dataset randomly into three parts:

- a training subset (Tr), used for model-fitting;
- a validation subset (V), used for intermediate modeling decisions (e.g., which variables to include in the model);
- a test subset (Te), used for final model evaluation.

This approach is described in Section 7.2 of The Elements of Statistical Learning by Hastie, Tibshirani, and Friedman, who suggest 50% training, 25% validation, and 25% test as a typical choice.
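A minimal base-R sketch of this random three-way split follows. The function name splitThreeWay and its arguments are my own illustrative choices and do not come from any package. The function assigns each row a Tr, V, or Te label in the requested proportions and randomly permutes those labels with sample(). Any rows left over from rounding are assigned to the training subset.

```r
# Randomly partition the rows of DF into training (Tr), validation (V), and
# test (Te) subsets; the default 50/25/25 proportions follow Hastie,
# Tibshirani, and Friedman. splitThreeWay is a hypothetical helper.
splitThreeWay <- function(DF, fractions = c(Tr = 0.50, V = 0.25, Te = 0.25),
                          iseed = 1) {
  stopifnot(isTRUE(all.equal(sum(fractions), 1)))
  set.seed(iseed)
  n <- nrow(DF)
  counts <- floor(fractions * n)
  counts[1] <- counts[1] + (n - sum(counts))   # rounding leftovers go to Tr
  # One label per row in the requested proportions, then randomly permuted
  labels <- sample(rep(names(fractions), times = counts))
  split(DF, factor(labels, levels = names(fractions)))
}

# Usage on a toy data frame with 1000 rows
toy <- data.frame(x = 1:1000)
parts <- splitThreeWay(toy, iseed = 3)
sapply(parts, nrow)   # Tr: 500, V: 250, Te: 250
```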

The other point of this post is to say something about the different roles of model uncertainty and data uncertainty in the practice of predictive modeling. I will say a little more at the end. Whether we are considering business applications like predicting customer behavior or industrial process control applications that predict the influence of changes in control valve settings, the basic predictive modeling process consists of three steps:

1. build a prediction model;
2. fix (i.e., “finalize”) this model;
3. apply it to generate predictions from data not seen in the model-building process.

In these applications, model uncertainty plays an important role in the model development process, but once we have fixed the model, we have eliminated this uncertainty by fiat. Uncertainty remains an important issue in these applications, but the source of this uncertainty is in the data from which the model generates its predictions and not in the model itself once we have fixed it.

However, as George Box famously said, “all models are wrong, but some are useful,” and this point is crucial here: if the model uncertainty is great enough, it may be difficult or impossible to select a fixed model that is good enough to be useful in practice.
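The build/fix/apply sequence described above can be sketched in a few lines of base R. In this sketch, a logistic regression fit with glm() to synthetic data stands in for a classification tree. That is an illustrative substitution, not one of the models discussed in this post. "Fixing" the model is represented by saving the fitted object with saveRDS() and reloading it before prediction. After that point the model itself no longer changes, so any remaining uncertainty in its predictions comes from the new data.

```r
# Step 1: build a prediction model (glm on synthetic data, a stand-in for ctree)
set.seed(42)
trainData <- data.frame(x = rnorm(200))
trainData$y <- rbinom(200, size = 1, prob = plogis(0.5 + 1.5 * trainData$x))
model <- glm(y ~ x, family = binomial, data = trainData)

# Step 2: fix ("finalize") the model by saving the fitted object to disk
modelFile <- tempfile(fileext = ".rds")
saveRDS(model, modelFile)

# Step 3: apply the frozen model to data not seen during model-building
frozenModel <- readRDS(modelFile)
newData <- data.frame(x = c(-1, 0, 1))
predictedProb <- predict(frozenModel, newdata = newData, type = "response")
predictedProb
```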

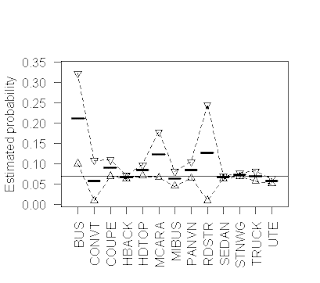

Returning to the topic of uncertainty in tree-based models, the above plot is a graphical representation of a classification tree model repeated from my previous two posts. This model was fit using the ctree procedure in the R package party, taking all optional parameters at their default values.

As before, the dataset used to generate this model was the Australian vehicle insurance dataset car.csv, obtained from the website associated with the book Generalized Linear Models for Insurance Data, by Piet de Jong and Gillian Z. Heller. This model – and all of the others considered in this post – was fit using the same formula as before:

```r
Fmla = clm ~ veh_value + veh_body + veh_age + gender + area + agecat
```

Each record in this dataset describes a single-vehicle, single-driver insurance policy, and clm is a binary response variable taking the value 1 if the policy filed one or more claims during the observation period and 0 otherwise. The other variables (on the right side of “~”) represent covariates that are either numeric (veh_value, the value of the vehicle) or categorical (all other variables, representing the vehicle body type, its age, the gender of the driver, the region where the vehicle is driven, and the driver’s age).

As I noted above, this model was fit to the entire dataset, a practice that is to be discouraged since it does not leave independent datasets of similar character for validation and testing. To address this problem, I randomly partitioned the original dataset into a 50% training subset, a 25% validation subset, and a 25% test subset, as suggested by Hastie, Tibshirani, and Friedman. The plot shown below represents the ctree model we obtain using exactly the same fitting procedure as before, but applied to the 50% random training subset instead of the complete dataset. Comparing these plots reveals substantial differences in the overall structure of the trees we obtain, strictly as a function of the data used to fit the models. In particular, while the original model has seven terminal nodes (i.e., the tree assigns every record to one of seven “leaves”), the model obtained from the training data subset has only four.

Also, note that the branches in the original tree model are determined by the three variables agecat, veh_body, and veh_value, while the branches in the model built from the training subset are determined by the two variables agecat and veh_value only.

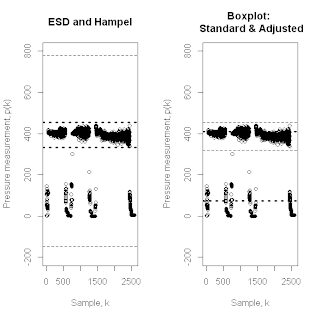

These differences illustrate the point noted above about the strong dependence of classification tree model structure on the data used in model-building. One could object that since the two datasets used here differ by a factor of two in size, the comparison isn’t exactly “apples-to-apples.” To see that this is not really the issue, consider the following two cases, based on the idea of bootstrap resampling. I won’t attempt a detailed discussion of the bootstrap approach here, but the basic idea is to assess the effects of data variability on a computational procedure by applying that procedure to multiple datasets, each obtained by sampling with replacement from a single source dataset. (For a comprehensive discussion of the bootstrap and some of its many applications, refer to the book Bootstrap Methods and their Application by A.C. Davison and D.V. Hinkley.) The essential motivation is that these datasets – called bootstrap resamples – all have the same essential statistical character as the original dataset.

Thus, by comparing the results obtained from different bootstrap resamples, we can assess the variability in results for which exact statistical characterizations are either unknown or impractical to compute. Here, I use this idea to obtain datasets that should address the “apples-to-apples” concern raised above. More specifically, I start with the training data subset used to generate the model described in the previous figure, and I use R’s built-in sample() function to sample the rows of this dataframe with replacement. For an arbitrary dataframe DF, the code to do this is simple:

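```r
# DF is any data frame; iseed is an integer random seed (e.g., 5 or 6)
set.seed(iseed)
BootstrapIndex = sample(seq(1, nrow(DF), 1), size = nrow(DF), replace = TRUE)
# drop = FALSE keeps the result a data frame even if DF has a single column
ResampleFrame = DF[BootstrapIndex, , drop = FALSE]
```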

The only variable in this procedure is the seed for the random sampling function sample(), which I have denoted as iseed. The extremely complicated figure below shows the ctree model obtained using the bootstrap resample generated from the training subset with iseed = 5.

Comparing this model with the previous one – both built from datasets of the same size, with the same general data characteristics – we see that the differences are even more dramatic than those between the original model (built from the complete dataset) and the second one (built from the training subset). Specifically, while the training subset model has four terminal nodes, determined by two variables, the bootstrap resample model uses all six of the variables included in the model formula, yielding a tree with 16 terminal nodes. But wait – sampling with replacement generates a significant number of duplicated records. For large datasets, each bootstrap resample contains approximately 63.2% of the distinct records in the source dataset, meaning that the other 36.8% of the resample’s records must be duplicates. Could this be the reason the results are so different? The following example shows that this is not the issue.

This plot shows the ctree model obtained from another bootstrap resample of the training data subset, obtained by specifying iseed = 6 instead of iseed = 5. This second bootstrap resample tree is much simpler, with only 7 terminal nodes instead of 16, and the branches of the tree are based on only four of the prediction variables instead of all six (specifically, neither gender nor veh_body appears in this model). While I don’t include all of the corresponding plots, I have also constructed and compared the ctree models obtained from the bootstrap resamples generated for all iseed values between 1 and 8, giving final models involving between four and six variables, with between 7 and 16 terminal nodes. In all cases, the datasets used in building these models were exactly the same size and had the same statistical character.
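The sketch below makes the “same size, same statistical character” point concrete. It generates bootstrap resamples for iseed = 1 through 8 using the same sampling scheme as the code above. The input is a hypothetical stand-in training frame of 20,000 rows, and both the name TrainFrame and its size are assumptions, not the actual training subset. Every resample has exactly as many rows as its source. Each also contains close to the expected fraction 1 - (1 - 1/n)^n of the distinct source records, a value that approaches 1 - 1/e ≈ 0.632 for large n. With the real data, each resample would then be passed to ctree() from the party package. That step appears only as a comment here because it needs car.csv.

```r
# Same resampling scheme as above, wrapped as a function of the seed
resampleForSeed <- function(DF, iseed) {
  set.seed(iseed)
  BootstrapIndex <- sample(seq_len(nrow(DF)), size = nrow(DF), replace = TRUE)
  DF[BootstrapIndex, , drop = FALSE]
}

# Hypothetical stand-in for the training subset: one row ID per record
TrainFrame <- data.frame(id = seq_len(20000))
resamples <- lapply(1:8, function(s) resampleForSeed(TrainFrame, s))
# With the real data, resample k would be fit as ctree(Fmla, data = resamples[[k]])

n <- nrow(TrainFrame)
sapply(resamples, nrow)                       # every resample has n = 20000 rows
distinctFraction <- sapply(resamples, function(R) length(unique(R$id))) / n
round(distinctFraction, 3)                    # observed fraction of distinct records
1 - (1 - 1/n)^n                               # expected fraction, close to 1 - 1/e
```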

The key point is that, as Professor Harrell noted in his comments, the structural variability of these classification tree models across similar datasets is substantial. In fact, this variability of individual tree-based models was one of the key motivations for developing the random forest method, which achieves substantially reduced model uncertainty by averaging over many randomly generated trees. Unfortunately, the price we pay for this improved model stability is a complete loss of interpretability. That is, looking at any one of the plots shown here, we can construct a simple description (e.g., node 12 in the above figure represents older drivers – agecat > 4 – with less expensive cars, and it has the lowest risk of any of the groups identified there). While we may obtain less variable predictions by averaging over a large number of these trees, such simple intuitive explanations of the resulting model are no longer possible.

I noted earlier that predictive modeling applications typically involve a three-step strategy: fit the model, fix the model, and apply the model. I also argued that once we fix the model, we have eliminated model uncertainty when we apply it to new data. Unfortunately, if the inherent model uncertainty is large, as in the examples presented here, this greatly complicates the “fix the model” step. That is, if small variations in our training data subset can cause large changes in the structure of our prediction model, it is likely that very different models will exhibit similar performance when applied to our validation data subset. How, then, do we choose? I will examine this issue further in my next post when I discuss overfitting and the training/validation/test split in more detail.